UPDATE: This issue is now resolved in the 2019-05-21 cumulative update (KB4497934).

In the last couple of weeks, we have been hearing reports from customers who are encountering problems after migrating virtual machines directly from Windows Server 2012 R2 to Windows Server 2019. People are seeing error messages like the following:

Critical 03/01/2019 16:13:49 Hyper-V-Worker 18604 None

‘Test VM 1’ has encountered a fatal error but a memory dump could not be generated. Error 0x2. If the problem persists, contact Product Support for the guest operating system. (Virtual machine ID 90B45891-E0EB-4842-8070-F30FF25C663A)

Critical 03/01/2019 16:13:49 Hyper-V-Worker 18560 None

‘Test VM 1’ was reset because an unrecoverable error occurred on a virtual processor that caused a triple fault. If the problem persists, contact Product Support. (Virtual machine ID 90B45891-E0EB-4842-8070-F30FF25C663A)

We have been digging into these issues and have identified the root cause. In Windows Server 2019 we made several changes to the virtual machine firmware (a topic that I plan to blog about another day). In the process we unfortunately exposed a bug. The effect of the bug is that a version 5.0, Generation 2 virtual machine from Windows Server 2012 R2 cannot boot on Windows Server 2019, because of the firmware state it carries over.

Specifically, the bug is exposed by the IPv6 boot data that is stored in the firmware of a Generation 2 virtual machine. Note that this does not affect Generation 1 virtual machines.
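If you want to see which virtual machines on a host match the description above before migrating them, one option is to filter the output of `Get-VM` on its `Generation` and `Version` properties. The sketch below assumes that approach; the function name `Select-PossiblyAffectedVM` is hypothetical, and the version list is a parameter because the post itself only names version 5.0.

```powershell
# Hypothetical helper: pass through only Generation 2 virtual machines whose
# configuration version is in the given list (5.0 = Windows Server 2012 R2).
function Select-PossiblyAffectedVM {
    param(
        [Parameter(ValueFromPipeline = $true)]
        $VM,
        [string[]] $Version = @('5.0')
    )
    process {
        if ($VM.Generation -eq 2 -and $Version -contains [string]$VM.Version) {
            $VM
        }
    }
}

# Usage on a Hyper-V host (requires the Hyper-V PowerShell module):
# Get-VM | Select-PossiblyAffectedVM | Select-Object Name, Generation, Version
```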

We are actively working on a fix for this issue right now.

Workaround

In the meantime, it is possible to work around this. To get the virtual machine to boot, you need to get Hyper-V to create new firmware entries for the IPv6 boot data. The easiest way to do this is to change the MAC addresses on any network adapters connected to the affected virtual machine. This process is different for virtual machines with dynamic and static MAC addresses.

Static MAC addresses

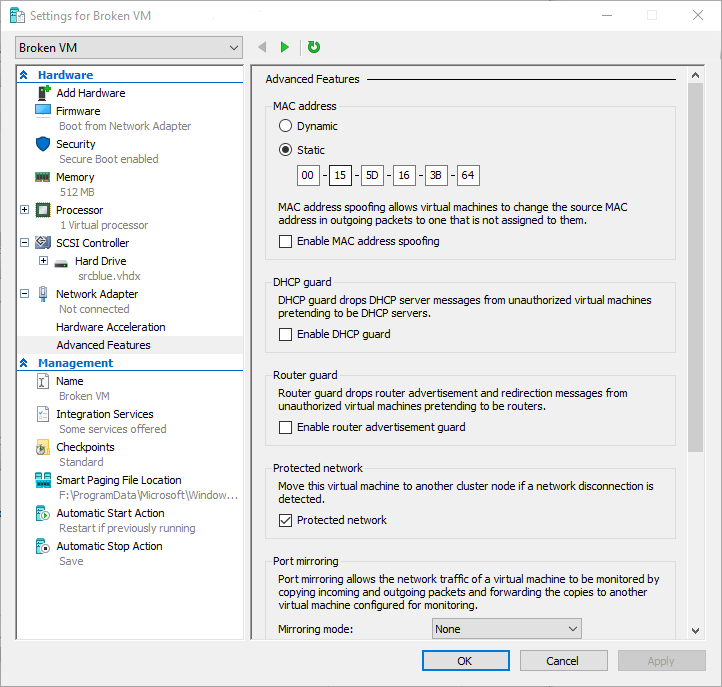

For virtual machines with network adapters that are set to use static MAC addresses – all you need to do is to open the virtual machine settings and change the MAC address to a new value:

I have also put together the following PowerShell snippet for people who like to automate things. This script goes through all network adapters on a virtual machine, finds the ones with static MAC addresses, and increments them by 100 (decimal 100, i.e. 0x64).

```powershell
# The name of the virtual machine that needs to be fixed
# (the virtual machine must be turned off before its MAC addresses can be changed)
$VMname = "Broken VM"

# Iterate over all the network adapters in the virtual machine
Get-VMNetworkAdapter -VMName $VMname | ForEach-Object {

    # Skip any network adapters that are using dynamic MAC addresses
    if (-not $_.DynamicMacAddressEnabled) {

        # Read the current MAC address (12 hex digits), add 100 (decimal),
        # and write it back as a zero-padded 12-digit hex string
        $newMac = ([int64]"0x$($_.MacAddress)" + 100).ToString("X").PadLeft(12, "0")

        Set-VMNetworkAdapter -VMNetworkAdapter $_ -StaticMacAddress $newMac
    }
}
```

The reason I chose to increment by 100 is in case people have consecutive MAC addresses: incrementing by 1 could move an adapter onto the address already used by the next one.
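The MAC arithmetic in the script above can also be pulled out into a small function and checked on its own before any adapter is changed. This is a sketch, not part of the original script: `Get-IncrementedMacAddress` is a hypothetical name, and the format check and 48-bit overflow check are additions.

```powershell
# Hypothetical helper: add an offset to a 12-hex-digit MAC address string
# (the format Get-VMNetworkAdapter reports, e.g. "00155D010203").
function Get-IncrementedMacAddress {
    param(
        [Parameter(Mandatory = $true)] [string] $MacAddress,
        [int64] $Offset = 100   # decimal 100, i.e. 0x64
    )
    if ($MacAddress -notmatch '^[0-9A-Fa-f]{12}$') {
        throw "Expected 12 hex digits, got '$MacAddress'"
    }
    $max = [Convert]::ToInt64('FFFFFFFFFFFF', 16)
    $value = [Convert]::ToInt64($MacAddress, 16) + $Offset
    if ($value -lt 0 -or $value -gt $max) {
        throw "Adding $Offset to $MacAddress leaves the 48-bit MAC address range"
    }
    # Format as upper-case hex, zero-padded back to 12 digits
    $value.ToString("X12")
}
```

For example, `Get-IncrementedMacAddress -MacAddress "00155D010203"` returns `00155D010267`, the same value the script above would assign.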

Dynamic MAC addresses

If your virtual machine is using dynamic MAC addresses, it is possible that you will not hit this problem at all. There are a number of cases where Hyper-V will regenerate the MAC address automatically.

You can also force Hyper-V to regenerate dynamic MAC addresses by changing the dynamic MAC address pool range used by Hyper-V. Dimitris Tonias has written a great article on how to configure this that you should review.
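For reference, the dynamic MAC address pool is a host-wide setting exposed through `Get-VMHost` (the `MacAddressMinimum` and `MacAddressMaximum` properties) and changed with `Set-VMHost`. The range below is a placeholder for illustration, not a recommendation; whatever range you choose should not overlap the pools of other Hyper-V hosts on the same network.

```powershell
# Show the dynamic MAC address pool currently configured on this host
Get-VMHost | Select-Object MacAddressMinimum, MacAddressMaximum

# Placeholder range for illustration only: choose a range that does not
# overlap the pools of other Hyper-V hosts on the same network
Set-VMHost -MacAddressMinimum "00155D7F0000" -MacAddressMaximum "00155D7F00FF"
```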

Live Migration

One interesting note to make here: when you live migrate a virtual machine, it does not boot through the firmware. This means that if you live migrate a virtual machine from Windows Server 2012 R2 to Windows Server 2019, it will continue to run. However, it will not boot if you shut it down and try to start it again after the migration. In this situation the workaround above will also address the problem.

My apologies to anyone who has been affected by this issue. Hopefully we will have a fix out for this soon!

Cheers,

Ben

I had this happen to me when I first attempted to move to Storage Spaces Direct back in October. I decided to postpone going straight to Server 2019, as I didn’t know a workaround at the time. I ended up setting up S2D with Server 2016. While my VMs were on Server 2016, I updated the VM configuration version to 8.0.

Two weekends ago, I migrated all of the VMs off of my S2D cluster and rebuilt it with Server 2019 (don’t ask, it’s a long story). Now that I’ve migrated all of the VMs back onto the rebuilt cluster under WS2019, I still ran into this situation. Your workaround worked for one of the VMs.

My question is: what about Linux VMs, which don’t like changing MAC addresses, and other Windows VMs that run software licensed against the MAC address (e.g. ShoreTel)? I’m guessing I will need to bring them online after changing the MAC address, then shut them down and revert to the original MAC address. Just curious what your thoughts on the subject are. Thx!

++ on this. We ran into this exactly too. Moved about 30 VMs and almost all have static MACs, but only a couple actually ran into this bug. Of course one of them is the ShoreTel Director that is tied to the MAC address. 44 Days to go. Hopefully there is a fix soon.

Hi,

It doesn’t appear to be related, but is there any link between this and Event 18550?

‘GuestVmName’ was reset because an unrecoverable error occurred on a virtual processor that caused a triple fault. This error might have been caused by a problem in the hypervisor. If the problem persists, contact Product Support. (Virtual machine ID)

These are VMs that will boot but will continuously reboot.

Benjamin, I have been testing a migration of Gen2 VMs from Win2K12R2 to Win2K19 and have encountered this bug. We have a few VMs that have to have static MACs because the software on them is licensed to the MAC. Relicensing is a big headache, so just changing the static MAC is not ideal. Unfortunately, I have not found any sequence that lets the VM boot using the pre-migration static MAC (switching to dynamic and back, switching to a different static MAC and back, removing the network adapter and re-adding it, etc.). Every time I switch it back to the original, it won’t boot.

Have you found a sequence on how to get it to utilize the same Static MAC? (Or any update on when a fix will be out?)

Thank you,

Andrew

We are actively hitting this bug. Is there a fix yet or is there a place I can check on a regular basis for a fix? Thanks!!

Ben, I discovered this issue about two days after you posted this. Has there been any movement on a hotfix instead of the work around?

We just dealt with this issue yesterday after a weekend migration with VMs that were version 8, Generation 2. We also tested upgrading them to configuration version 9 to see if that alone would resolve the issue, and it doesn’t.

Same here. I have hundreds of VMs with static MAC because they must retain their DHCP IP leases due to firewall rules.

What would you or MS suggest? Re-generating every MAC, altering DHCP reservations + Firewall rules would be the work of a few days.

Thanks Benjamin. Can you provide an update on this bug fix and once this bug is fixed, will Cluster Rolling Upgrade from 2012R2 to 2019 be supported?

In addition to refusing to boot we have also had a handful of VMs that will cease to run. My logs are full of Hyper-V Worker events 18604, 18560, and 18570. Usually the VMs keep running but once a week or so I check the host and the VMs are in the ‘off’ state. I then have to use Ben’s workaround and then the VM can be started again.
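To see how often these events occur on a host, `Get-WinEvent` can query the Hyper-V worker admin log by event ID. This is a sketch, assuming the log name `Microsoft-Windows-Hyper-V-Worker-Admin` (the log named in the KB4497934 note) and an arbitrary seven-day window; `New-HyperVWorkerEventFilter` is a hypothetical helper that only builds the filter, so it can be inspected before the query is run.

```powershell
# Hypothetical helper: build a Get-WinEvent filter for the Hyper-V worker
# events reported in this thread (18560, 18570 and 18604).
function New-HyperVWorkerEventFilter {
    param([int] $Days = 7)   # arbitrary look-back window
    @{
        LogName   = 'Microsoft-Windows-Hyper-V-Worker-Admin'
        Id        = 18560, 18570, 18604
        StartTime = (Get-Date).AddDays(-$Days)
    }
}

# Usage on a Hyper-V host; -ErrorAction SilentlyContinue suppresses the
# error Get-WinEvent reports when no matching events are found:
# Get-WinEvent -FilterHashtable (New-HyperVWorkerEventFilter) -ErrorAction SilentlyContinue |
#     Select-Object TimeCreated, Id, Message
```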

I am sad to hear that updating the VM’s configuration version will not help. Do you think that removing the existing virtual network adapter and creating a new one would be more of a permanent fix? I have the feeling that the answer will be ‘no’ and that we will all have to wait for an official fix from Microsoft.

I’m having this same issue today, except my MAC addresses are all dynamic, so the only thing I can do is keep re-creating the virtual switch with a different address. I cannot just have the machines turn off; I need to migrate them into a production environment. The VMs all worked perfectly when they were built on my other Windows Server 2012 R2 host. Just from moving them onto this new host, nothing is working. I have also tried updating the configuration version, which now means that I can’t even move them to another host because they will not work at all.

I would really love to have a fix for this.

Any information regarding a permanent fix yet? I have four VMs that I have to put into production, all having this issue.

We’re hitting this bug both when moving version 5 VMs from 2012 R2 and version 8 VMs from 2016. I’m surprised there’s no official Microsoft support KB published on this. Do you have any insight into why there is no Microsoft support document? This workaround is fixing the issue for us, but changing MACs requires downtime, which is very difficult in production environments. It also breaks some application licenses tied to the MAC and can have very bad results on some virtual appliances (like F5). Is there going to be a fix from Microsoft soon that won’t require downtime and won’t require changing the MAC?

I also had to struggle with this, and you helped me. However, you don’t need to pick a new MAC address yourself. I have tested that if you stop the VM, change the setting to static MAC, apply it, and then switch back to dynamic, the VM will start. Because I didn’t have many VMs with this problem (maybe 15), I just did it manually in the GUI. The VMs got new MAC addresses. I also had no problems with Linux VMs; they didn’t lose their IPs (the OS is CentOS). If you have a static MAC and have this issue, maybe just switch to another static address, apply the setting, and then switch back. But on that point I really don’t know.
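The static-then-dynamic toggle described in this comment could be scripted roughly as below. It is a sketch under assumptions: `Reset-DynamicMacAddress` is a hypothetical name, the VM must already be turned off, and it mirrors the GUI steps (pin the current address as static, then switch back to dynamic), relying on the commenter's observation that Hyper-V then assigns a new address.

```powershell
# Hypothetical helper mirroring the GUI steps from the comment above:
# for each adapter using a dynamic MAC, pin its current address as static,
# then switch it back to dynamic. Run only while the VM is turned off.
function Reset-DynamicMacAddress {
    param([Parameter(Mandatory = $true)] $NetworkAdapter)

    foreach ($adapter in $NetworkAdapter) {
        if ($adapter.DynamicMacAddressEnabled) {
            Set-VMNetworkAdapter -VMNetworkAdapter $adapter -StaticMacAddress $adapter.MacAddress
            Set-VMNetworkAdapter -VMNetworkAdapter $adapter -DynamicMacAddress
        }
    }
}

# Usage on a Hyper-V host (the VM name is a placeholder):
# Stop-VM -Name "Broken VM"
# Reset-DynamicMacAddress -NetworkAdapter (Get-VMNetworkAdapter -VMName "Broken VM")
# Start-VM -Name "Broken VM"
```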

We have had this bug on about 50 migrated VMs, almost all of them configured with dynamic MAC addresses. It cost us several days to rebuild the VMs around the existing VHDXs to get them working again. Thanks for the workaround, but it’s quite poor that this has not been fixed even two months later. Greetings to Mr. Nadella for his great quality policy…

“hopefully we will have a fix out for this out soon”

Any info about the fix?

A private fix from Microsoft resolves this issue. Open a case and you’ll receive a private hotfix, which seems to replace vmchipset.dll 10.0.17763.348 (with all updates as of 2019-03-26) with vmchipset.dll 10.0.17763.391 (private hotfix).

Hi, I was hit by this after live-migrating VMs with static MACs from a 2012 R2 server to a 2019 server. The live migrate worked fine and the VM continued to run. However, three weeks later, when the VM applied regular monthly updates and tried to reboot, it failed to start up again after the updates-related shutdown. I was left with non-running and non-startable VMs the next morning. 🙁 I discovered the MAC address change work-around by experimentation.

Are there any updates as to when there will be a permanent fix?

I have received a patch from Microsoft, which helps.

Another workaround is to have a 2016 Hyper-V node in the cluster: fail the VM over to that node, start it, and fail it back.

I hope Microsoft releases the patch soon.

I first faced the issue in November and December 2018, when we upgraded Hyper-V from 2012 R2 to 2016 and then to 2019.

Initially we recreated the VMs, as the count was low, but now that is not possible considering the amount of work involved.

Changing the MAC address alone creates additional challenges, including re-licensing products that depend on the MAC address.

Any official update to fix this?

Hi Ben,

thanks a lot for a solution. But in my case I had to set the Dynamic MAC back. Then it worked.

Any news about fix?

Good call, worked for me. I migrated a Gen2 VM from Server 2016 to Win10 1809 and hit this bug. Changing the MAC address solved it.

If anyone is still having problems with this and finds this comment: KB4497934 solves the problem, so you have to install the update and reboot your host 🙂

This issue is reportedly fixed in the 2019-05-21 cumulative update (KB4497934):

– Addresses an issue that prevents a Generation 2 virtual machine from starting on a Windows Server 2019 Hyper-V host. In the Microsoft-Windows-Hyper-V-Worker-Admin event log, Event ID 18560 displays, “VM name was reset because an unrecoverable error occurred on a virtual processor that caused a triple fault.”

Any word on a fix for this?

It looks like rollup KB4497934 addresses an issue that prevents a Generation 2 virtual machine from starting on a Windows Server 2019 Hyper-V host. In the Microsoft-Windows-Hyper-V-Worker-Admin event log, Event ID 18560 displays, “VM name was reset because an unrecoverable error occurred on a virtual processor that caused a triple fault.”

Will be testing this shortly.

Thanks for the post Benjamin,

We encountered the same problem in a migration, so this still isn’t fixed.

You cannot even take precautions without taking the VM offline.

Applied the KB4497934 update, but we are still having the issue on some 2012 servers.

Benjamin,

We have the same issue: the fix is already applied, but we still get the same boot problem.

Changing the MAC address works, but when we change it back to the original MAC address, the VM won’t boot.

Is there any other way to get rid of or regenerate the firmware file?

Benjamin, just wanted to thank you for your solution. Worked for us also and saved us hours and hours of work ! Would never have found that alone.

Greetings from Switzerland. Mark.